Three weeks ago, I reviewed the first half of Utilitarianism for and against. This week I’ll be reviewing the second half, the against side. I should note that I’m a utilitarian and therefore likely to be biased against the arguments presented here. If my criticism is rather thicker than last time, it is not because the author of the second essay is any worse than the first.

The author is one Sir Bernard Williams. According to his Wikipedia article, he was a particularly humanistic philosopher in the old Greek mode. He was skeptical of attempts to build an analytical foundation for moral philosophy and of his own prowess in arguments. It seems that he had something pithy or cutting to say about everything, which made him notably wary of pithy or clever answers. He’s also described as a proto-feminist, although you wouldn’t know it from his writing.

Williams didn’t write his essay out of a rationalist desire to disprove utilitarianism with pure reason (a concept he seemed every bit as skeptical of as Smart was). Instead, Williams wrote this essay because he agrees with Smart that utilitarianism is a “distinctive way of looking at human action and morality”. It’s just that unlike Smart, Williams finds the specific distinctive perspective of utilitarianism often horrible.

Smart anticipated this sort of reaction to his essay. He himself despaired of finding a single ethical system that could please everyone, or even please a single person in all their varied moods.

One of the very first things I noticed in Williams’ essay was the challenge of attacking utilitarianism on its own terms. To convince a principled utilitarian that utilitarianism is a poor choice of ethical system, it is almost always necessary to appeal to the consequences of utilitarianism. This forces any critic to frame their arguments a certain way, a way which might feel unnatural. Or repugnant.

Williams begins his essay proper with (appropriately) a discussion of consequences. He points out that it is difficult to hold actions as valuable purely by their consequences because this forces us to draw arbitrary lines in time and declare the state of the world at that time the “consequences”. After all, consequences continue to unfold forever (or at least, until the heat death of the universe). To have anything to talk about at all, Williams decides that it is not quite consequences that consequentialism cares about, but states of affairs.

Utilitarianism is the form of consequentialism that has happiness as its sole important value and seeks to bring about the state of affairs with the most happiness. I like how Williams exposed the question-begging that utilitarianism commonly engages in. He essentially asks ‘why should happiness be the only thing we treat as intrinsically valuable?’ Williams mercifully didn’t drive this home, but I was still left with uncomfortable questions for myself.

Instead he moves on to his first deep observation. You see, if consequentialism were just about valuing certain states of affairs more than others, you could call deontology a form of consequentialism that held that duty was the only intrinsically valuable thing. But that can’t be right, because deontology is clearly different from consequentialism. The distinction, Williams suggests, is that consequentialists discount the possibility of actions holding any inherent moral weight. For a consequentialist, an action is right because it brings about a better state of affairs. For non-consequentialists, a state of affairs can be better – even if it contains less total happiness or integrity or whatever they care about than a counterfactual state of affairs given a different action – because the right action was taken.

A deontologist would say that it is right for someone to do their duty in a way that ends up publicly and spectacularly tragic, such that it turns a thousand people off of doing their own duty. A consequentialist who viewed duty as important for the general moral health of society – who, in Smart’s terminology, viewed acting from duty as good – would disagree.

Williams points out that this very emphasis on comparing states of affairs (so natural to me) is particularly consequentialist and utilitarian. That is to say, it is not particularly meaningful for a deontologist or a virtue ethicist to compare states of affairs. Deontologists have no duty to maximize the doing of duty; if you ask a deontologist to choose between a state of affairs that has one hundred people doing their duty and another that has a thousand, it’s not clear that either state is preferable from their point of view. Sure, deontologists think people should do their duty. But duty embodied in actions is the point, not some cosmic tally of duty.

Put as a moral statement, non-consequentialists lack any obligation to bring about more of what they see as morally desirable. A consequentialist may feel both fondness for and a moral imperative to bring about a universe where more people are happy. Non-consequentialists only have the fondness.

One deontologist of my acquaintance said that trying to maximize utility felt pointless – they viewed it as about as morally important as having a high score on a Tetris game. We ended up staring at each other in blank incomprehension.

In Williams’ view, rejection of consequentialism doesn’t necessarily lead to deontology, though. He sums it up simply as: “all that is involved… in the denial of consequentialism, is that with respect to some type of action, there are some situations in which that would be the right thing to do, even though the state of affairs produced by one’s doing that would be worse than some other state of affairs accessible to one.”

A deontologist will claim right actions must be taken no matter the consequences, but to be non-consequentialist, an ethical system merely has to claim that some actions are right despite a variety of more or less bad consequences that might arise from them.

Or, as I wrote angrily in the margins: “ok, so not necessarily deontology, just accepting sub-maximal global utility”. It is hard to explain to a non-utilitarian just how much this bugs me, but I’m not going to go all rationalist and claim that I have a good reason for this belief.

Williams then turns his attention to the ways in which he thinks utilitarianism’s insistence on quantifying and comparing everything is terrible. Williams believes that by refusing to categorically rule any action out (or worse, specifically trying to come up with situations in which we might do horrific things), utilitarianism encourages people – even non-utilitarians who bump into utilitarian thought experiments – to think of things in utilitarian (that is to say, explicitly comparative) terms. It seems like Williams would prefer there to be actions that are clearly ruled out, not just less likely to be justified.

I get the impression of a man almost tearing out his hair because for him, there exist actions that are wrong under all circumstances and here we are, talking about circumstances in which we’d do them. There’s a kernel of truth here too. I think there can be a sort of bravado in accepting utilitarian conclusions. Yeah, I’m tough enough that I’d kill one to save one thousand. You wouldn’t? I guess you’re just soft and old-fashioned. For someone who cares as much about virtue as I think Williams does, this must be abhorrent.

I loved how Williams summed this up.

> The demand… to think the unthinkable is not an unquestionable demand of rationality, set against a cowardly or inert refusal to follow out one's moral thoughts. Rationality he sees as a demand not merely on him, but on the situations in and about which he has to think; unless the environment reveals minimum sanity, it is insanity to carry the decorum of sanity into it.

For all that I enjoyed the phrasing, I don’t see how this changes anything; there is nothing at all sane about the current world. A life is worth something like $7 million to $9 million and yet can be saved for less than $5000. This planet contains some of the most wrenching poverty and lavish luxury imaginable, often in the very same cities. Where is the sanity? If Williams thinks sane situations are a reasonable precondition to sane action, then he should see no one on earth with a duty to act sanely.

The next topic Williams covers is responsibility. He starts with a discussion of agent interchangeability in utilitarianism. Williams believes that utilitarianism merely requires that someone do the right thing. This implies that to the utilitarian, there is no meaningful difference between me doing the utilitarian right action and you doing it, unless something about me doing it instead of you leads to a different outcome.

This utter lack of concern for who does what, as long as the right thing gets done, doesn’t actually seem to absolve utilitarians of responsibility. Instead, it tends to increase it. Williams says that unlike adherents of many ethical systems, utilitarians have negative responsibilities; they are just as much responsible for the things they don’t do as they are for the things they do. If someone has to act and no one else will, then you have to.

This doesn’t strike me as that unique to utilitarianism. I was raised Catholic and can attest that Catholics (who are supposed to follow a form of virtue ethics) have a notion of negative responsibility too. At every Mass, before receiving the Eucharist, Catholics ask God for forgiveness for their sins, in thoughts and words, in what they have done and in what they have failed to do.

Leaving aside whether the concept of negative responsibility is uniquely utilitarian or not, Williams does see problems with it. Negative responsibility makes so much of what we do dependent on the people around us. You may wish to spend your time quietly growing vegetables, but be unable to do so because you have a particular skill – perhaps even one that you don’t really enjoy using – that the world desperately needs. Or you may wish never to take a life, yet be confronted with a runaway trolley that can only be diverted from hitting five people by pulling the lever that makes it hit one.

This didn’t really make sense to me as a criticism until I learned that Williams deeply cares about people living authentic lives. In both the cases above, authenticity played no role in the utilitarian calculus. You must do things, perhaps things you find abhorrent, because other people have set up the world such that terrible outcomes would happen if you didn’t.

It seems that Williams might consider it a tragedy for someone to feel compelled by their ethical system to do something that is inauthentic. I imagine he views this as about as much of a crying waste of human potential as I view the yearly deaths of 429,000 people due to malaria. For all my personal sympathy for him, I am less than sympathetic to a view that gives these the same weight (or treats inauthenticity as the greater tragedy).

Radical authenticity requires us to ignore society. Yes, utilitarianism plops us in the middle of a web of dependencies and a buffeting sea of choices that were not ours, while demanding we make the best out of it all. But our moral philosophies surely are among the things that push us towards an authentic life. Would Williams view it as any worse that someone was pulled from her authentic way of living because she would starve otherwise?

To me, there is a certain authenticity in following your ethical system wherever it leads. I find this authenticity beautiful, but not worthy of moral consideration, except insofar as it affects happiness. Williams finds this authenticity deeply important. But by rejecting consequentialism, he has no real way to argue for more of the qualities he desires, except perhaps as a matter of aesthetics.

It seems incredibly counter-productive to me to say to people – people in the midst of a society that relentlessly pulls them away from authenticity with impersonal market forces – that they should turn away from the one ethical system that seems to have as the desired outcome a happier system. A Kantian has her duty to duty, but as long as she does that, she cares not for the system. A virtue ethicist wishes to be virtuous and authentic, but outside of her little bubble of virtue, the terrors go on unabated. It’s only the utilitarian who holds a better society as an end in itself.

Maybe this is just me failing to grasp non-utilitarian epistemologies. It baffles me to hear “this thing is good and morally important, but it’s not like we think it’s morally important for there to be more of it; that goes too far!”. Is this a strawman? If someone could explain what Williams is getting at here in terms I can understand, I’d be most grateful.

I do think Williams misses one key thing when discussing the utilitarian response to negative responsibility. Actions should be assessed on the margin, not in isolation. That is to say, the marginal effect of someone becoming a doctor, or undertaking some other career generally considered benevolent, is quite low if there are others also willing to do the job. A doctor might personally save hundreds, or even thousands of lives over her career, but her marginal impact will be saving something like 25 lives.

The reasons for this are manifold. First, when there are few doctors, they tend to concentrate on the most immediately life-threatening problems. As you add more and more doctors, they can help, but after a certain point, the supply of doctors will outstrip the demand for urgent life-saving attention. They can certainly help with other tasks, but they will each save fewer lives than the first few doctors.

Second, there is a somewhat fixed supply of doctors. Despite many, many people wishing they could be doctors, only so many can get spots in medical school. Even assuming that medical school admissions departments are perfectly competent at assessing future skill at being a doctor (and no one really believes they are), your decision to attend medical school (and your successful admission) doesn’t result in one extra doctor. It simply means that you were slightly better than the next best person (who would have been admitted if you weren’t).

Finally, when you become a doctor you don’t replace one of the worst already practising doctors. Instead, you replace a retiring doctor who is (for statistical purposes) about average for her cohort.

All of this is to say that utilitarians should judge actions on the margin, not in absolute terms. It isn’t that bad (from a utilitarian perspective) not to devote all your attentions to the most effective direct work, because unless a certain project is very constrained by the number of people working on it, you shouldn’t expect to make much marginal difference. On the other hand, earning a lot of money and giving it to highly effective charities (or even a more modest commitment, like donating 10% of your income) is likely to do a huge amount of good, because most people don’t do this, so you’re replacing a person at a high-paying job who was doing (from a utilitarian perspective) very little good.

Williams either isn’t familiar with this concept, or omitted it in the interest of time or space.

Williams’ next topic is remoter effects. A remoter effect is any effect that your actions have on the decision making of other people. For example, if you’re a politician and you lie horribly, are caught, and get re-elected by a large margin, a possible remoter effect is other politicians lying more often. With the concept of remoter effects, Williams is pointing at what I call second order utilitarianism.

Williams makes a valid point that many of the justifications from remoter effects that utilitarians make are very weak. For example, despite what some utilitarians claim, telling a white lie (or even telling any lie that is unpublicized) doesn’t meaningfully reduce the propensity of everyone in the world to tell the truth.

Williams thinks that many utilitarians get away with claiming remoter effects as justification because they tend to be used as a way to make utilitarianism give the common, respectable answers to ethical dilemmas. He thinks people would be much more skeptical of remoter effects if they were ever used to argue for positions that are uncommonly held.

This point about remoter effects was, I think, a necessary precursor to Williams’ next thought experiment. He asks us to imagine a society with two groups, A and B. There are many more members of A than B. Furthermore, members of A are disgusted by the presence (or even the thought of the presence) of members of group B. In this scenario, there has to exist some level of disgust and some ratio between A and B that makes the clear utilitarian best option relocating all members of group B to a different country.

With Williams’ recent reminder that most remoter effects are weaker than we like to think still ringing in my ears, I felt fairly trapped by this dilemma. There are clear remoter effects here: you may lose the ability to advocate against this sort of ethnic cleansing in other countries. Successful, minimally condemned ethnic cleansing could even encourage copy-cats. In the real world, these might both be valid rejoinders, but for the purposes of this thought experiment, it’s clear these could be nullified (e.g. if we assume few other societies like this one and a large direct utility gain).

The only way out that Williams sees fit to offer us is an obvious trap. What if we claimed that the feelings of group A were entirely irrational and that they should just learn to live with them? Then we wouldn’t be stuck advocating for what is essentially ethnic cleansing. But humans are not rational actors. If we were to ignore all such irrational feelings, then utilitarianism would no longer be a pragmatic ethical system that interacts with the world as it is. Instead, it would involve us interacting with the world as we wish it to be.

Furthermore, it is always a dangerous game to discount other people’s feelings as irrational. The problem with the word irrational (in the vernacular, not utilitarian sense) is that no one really agrees on what is irrational. I have an intuitive sense of what is obviously irrational. But so, alas, do you. These senses may align in some regions (e.g. we both may view it as irrational to be angry because of a belief that the government is controlled by alien lizard-people), but not necessarily in others. For example, you may view my atheism as deeply irrational. I obviously do not.

Williams continues this critique to point out that much of the discomfort that comes from considering – or actually doing – things the utilitarian way comes from our moral intuitions. While Smart and I are content to discount these feelings, Williams is horrified at the thought. To view discomfort from moral intuitions as something outside yourself, as an unpleasant and irrational emotion to be avoided, is – to Williams – akin to losing all sense of moral identity.

This strikes me as more of a problem for rationalist philosophers. If you believe that morality can be rationally determined via the correct application of pure reason, then moral intuitions must be key to that task. From a materialist point of view though, moral intuitions are evolutionary baggage, not signifiers of something deeper.

Still, Williams made me realize that this left me vulnerable to the question “what is the purpose of having morality at all if you discount the feelings that engender morality in most people?”, a question I’m at a loss to answer well. All I can say (tautologically) is “it would be bad if there was no morality”; I like morality and want it to keep existing, but I can’t ground it in pure reason or empiricism; no stone tablets have come from the world. Religions are replete with stone tablets and justifications for morality, but they come with metaphysical baggage that I don’t particularly want to carry. Besides, if there were a hell, utilitarians would have to destroy it.

I honestly feel like a lot of my disagreement with Williams comes from our differing positions on the intuitive/systematizing axis. Williams has an intuitive, fluid, and difficult to articulate sense of ethics that isn’t necessarily transferable or even explainable. I have a system that seems workable and like it will lead to better outcomes. But it’s a system and it does have weird, unintuitive corner cases.

Williams talks about how integrity is a key moral stance (I think motivated by his insistence on authenticity). I agree with him as to the instrumental utility of integrity (people won’t want to work with you or help you if you’re an ass or unreliable). But I can’t ascribe integrity some sort of quasi-metaphysical importance or treat it as a terminal value in itself.

In the section on integrity, Williams comes back to negative responsibility. I do really respect Williams’ ability to pepper his work with interesting philosophical observations. When talking about negative responsibility, he mentions that most moral systems acknowledge some difference between allowing an action to happen and causing it yourself.

Williams believes the moral difference between action and inaction is conceptually important, “but it is unclear, both in itself and in its moral applications, and the unclarities are of a kind which precisely cause it to give way when, in very difficult cases, weight has to be put on it”. I am jealous three times over at this line, first at the crystal-clear metaphor, second at the broadly applicable thought underlying the metaphor, and third at the precision of language with which Williams pulls it off.

(I found Williams a less consistent writer than Smart. Smart wrote his entire essay in a tone of affable explanation and managed to inject a shocking amount of simplicity into a complicated subject. Williams frequently confused me – which I feel comfortable blaming at least in part on our vastly different axioms – but he was capable of shockingly resonant turns of phrase.)

I doubt Williams would be comfortable coming down either way on inaction’s equivalence to action. To the great humanist, it must ultimately (I assume) come down to the individual humans and what they authentically believed. Williams here is scoffing at the very idea of trying to systematize this most slippery of distinctions.

For utilitarians, the absence or presence of a distinction is key to figuring out what they must do. Utilitarianism can imply “a boundless obligation… to improve the world”. How a utilitarian undertakes this general project (of utility maximization) will be a function of how she can affect the world, but it cannot, to Williams, ever be the only project anyone undertakes. If it were the only project, underlain by no other projects, then it would, in Williams’ words, be “vacuous”.

The utilitarian can argue that her general project will not be the only project, because most people aren’t utilitarian and therefore have their own projects going on. Of course, this only gets us so far. Does this imply that the utilitarian should not seek to convince too many others of her philosophy?

What does it even mean for the general utilitarian project to be vacuous? As best I can tell, what Williams means is that if everyone were utilitarian, we’d all care about maximally increasing the utility of the world, but either be clueless where to start or else constantly tripping over each other (imagine, if you can, millions of people going to sub-Saharan Africa to distribute bed nets, all at the same time). The first order projects that Williams believes must underlie a more general project are things like spending time with friends, or making your family happy. Williams also believes that it might be very difficult for anyone to be happy without some of these more personal projects.

I would suggest that what each utilitarian should do is what they are best suited for. But I’m not sure if this is coherent without some coordinating body (i.e. a god) ensuring that people are well distributed for all of the projects that need doing. I can also suppose that most people can’t go that far on willpower. That is to say, there are few people who are actually psychologically capable of working to improve the world in a way they don’t enjoy. I’m not sure I have the best answer here, but my current internal justification leans much more on the second answer than the first.

Which is another way of saying that I agree with Williams; I think utilitarianism would be self-defeating if it suggested that the only project anyone should undertake is improving the world generally. I think a salient difference between us is that he seems to think utilitarianism might imply that people should only work on improving the world generally, whereas I do not.

This discussion of projects leads to Williams talking about the hedonic paradox (the observation that you cannot become happy by seeking out pleasures), although Williams doesn’t reference it by name. Here Williams comes dangerously close to a very toxic interpretation of the hedonic paradox.

Williams believes that happiness comes from a variety of projects, not all of which are undertaken for the good of others or even because they’re particularly fun. He points out that few of these projects, if any, are the direct pursuit of happiness and that happiness seems to involve something beyond seeking it. This is all conceptually well and good, but I think it makes happiness seem too mysterious.

I wasted years of my life believing that the hedonic paradox meant that I couldn’t find happiness directly. I thought if I did the things I was supposed to do, even if they made me miserable, I’d find happiness eventually. Whenever I thought of rearranging my life to put my happiness first, I was reminded of the hedonic paradox and desisted. That was all bullshit. You can figure out what activities make you happy and do more of those and be happier.

There is a wide gulf between the hedonic paradox as originally framed (which is purely an observation about pleasures of the flesh) and the hedonic paradox as sometimes used by philosophers (which treats happiness as inherently fleeting and mysterious). I’ve seen plenty of evidence for the first, but absolutely none for the second. With his critique here, I think Williams is arguably shading into the second definition.

This has important implications for the utilitarian. We can agree that for many people, the way to most increase their happiness isn’t to get them blissed out on food, sex, and drugs, without this implying that we will have no opportunities to improve the general happiness. First, we can increase happiness by attacking the sources of misery. Second, we can set up robust institutions that are conducive to happiness. A utilitarian urban planner would perhaps give just as much thought to ensuring there are places where communities can meet and form as she would to ensuring that no one would be forced to live in squalor.

Here’s where Williams gets twisty though. He wanted us to come to the conclusion that a variety of personal projects are necessary for happiness so that he could remind us that utilitarianism’s concept of negative responsibility puts great pressure on an agent not to have her own personal projects beyond the maximization of global happiness. The argument here seems to be (not for the first time) that utilitarianism is self-defeating because it will make everyone miserable if everyone is a utilitarian.

Smart tried to short-circuit arguments like this by pointing out that he wasn’t attempting to “prove” anything about the superiority of utilitarianism, simply presenting it as an ethical system that might be more attractive if it was better understood. Faced with Williams’ point here, I believe that Smart would say that he doesn’t expect everyone to become utilitarian and that those who do become utilitarian (and stay utilitarian) are those most likely to have important personal projects that are generally beneficent.

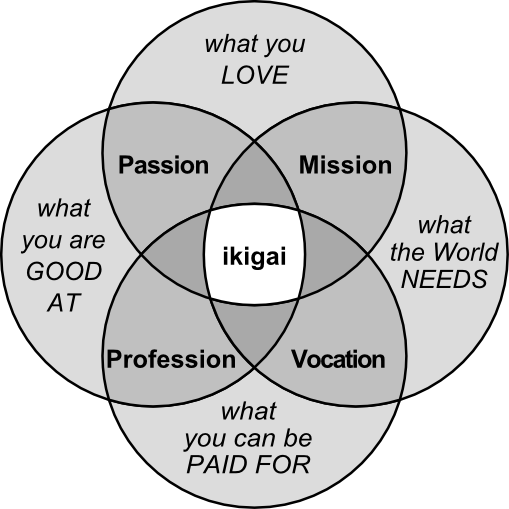

I have the pleasure of reading the blogs and Facebook posts of many prominent (for certain unusual values of prominent) utilitarians. They all seem to be enjoying what they do. These are people who enjoy research, or organizing, or presenting, or thought experiments and have found ways to put these vocations to use in the general utilitarian project. Or people who find that they get along well with utilitarians and therefore steer their career to be surrounded by them. This is basically finding ikigai within the context of utilitarian responsibilities.

Saying that utilitarianism will never be popular outside of those suited for it means accepting we don’t have a universal ethical solution. This is, I think, very pragmatic. It also doesn’t rule out utilitarians looking for ways we can encourage people to be more utilitarian. To slightly modify a phrase that utilitarian animal rights activists use: the best utilitarianism is the type you can stick with; it’s better to be utilitarian 95% of the time than it is to be utilitarian 100% of the time – until you get burnt out and give it up forever.

I would also like to add a criticism of Williams’ complaint that utilitarian actions are overly determined by the actions of others. Namely, the status quo certainly isn’t perfect. If we are to reject action because it is not on the projects we would most like to be doing, then we are tacitly endorsing the status quo. Moral decisions cannot be made in a vacuum and the terrain in which we must make moral decisions today is one marked by horrendous suffering, inequality, and unfairness.

The next two sections of Williams’ essay were the most difficult to parse, but also the most rewarding. They deal with the interplay between calculating utilities and utilitarianism and question the extent to which utilitarianism is practical outside of appealing to the idea of total utility. That is to say, they ask if the unique utilitarian ethical frame can, under practical conditions, have practical effects.

To get to the meat of Williams’ points, I had to wade through what at times felt like word games. All of the things he builds up to throughout these lengthy sections begin with a premise made up of two points that Williams thinks are implied by Smart’s essay.

1. All utilities should be assessed in terms of acts. If we're talking about rules, governments, or dispositions, their utility stems from the acts they either engender or prevent.

2. To say that a rule (as an example) has any effect at all, we must say that it results in some change in acts. In Williams' words: "the total utility effect of a rule's obtaining must be cashable in terms of the effects of acts."

Together, (1) and (2) make up what Williams calls the “act-adequacy” premise. If the premise is true, there must be no surplus source of utility outside of acts and, as Smart said, rule utilitarianism should (if it is truly concerned with optimific outcomes) collapse to act utilitarianism. This is all well and good when comparing systems as tools of total assessment (e.g. when we take the universe-wide view that I criticized Smart for hiding in), but Williams is first interested in how this causes rule and act utilitarianism to relate to actions.

If you asked an act utilitarian and a rule utilitarian “what makes that action right”, they would give different answers. The act utilitarian would say that it is right if it maximizes utility, but the rule utilitarian would say it is right if it is in accordance with rules that tend to maximize utility. Interestingly, if the act-adequacy premise is true, then both act and rule utilitarians would agree as to why certain rules or dispositions are desirable, namely, that actions that result from those rules or dispositions tend to maximize utility.

(Williams also points out that rules, especially formal rules, may derive utility from sources other than just actions following the rule. Other sources of utility include: explaining the rule, thinking about the rule, avoiding the rule, or even breaking the rule.)

But what do we do when actually faced with the actions that follow from a rule or disposition? Smart has already pointed out that we should praise or blame based on the utility of the praise/blame, not on the rightness or wrongness of the action we might be praising.

In Williams’ view, there are two problems with this. First, it is not a very open system. If you knew someone was praising or blaming you out of a desire to manipulate your future actions and not in direct relation to their actual opinion of your past actions, you might be less likely to accept that praise or blame. Therefore, it could very well be necessary for the utilitarian to hide why acts are being called good or bad (and therefore the reasons why they praise or blame).

The second problem is how this suggests utilitarians should stand with themselves. Williams acknowledges that utilitarians in general try not to cry over spilt milk (“[this] carries the characteristically utilitarian thought that anything you might want to cry over is, like milk, replaceable”), but argues that utilitarianism replaces the question of “did I do the right thing?” with “what is the right thing to do?” in a way that may not be conducive to virtuous thought.

(Would a utilitarian Judas have lived to old age contentedly, happy that he had played a role in humankind’s eternal salvation?)

The answer to “what is the right thing to do?” is of course (to the utilitarian) “that which has the best consequences”. Except “what is the right thing to do?” isn’t actually the right question to ask if you’re truly concerned with the best consequences. In that case, the question is “if asking this question is the right thing to do, what actions have the best consequences?”

Remember, Smart tried to claim that utilitarianism was to only be used for deliberative actions. But it is unclear which actions are the right ones to take as deliberative, especially a priori. Sometimes you will waste time deliberating, time that in the optimal case you would have spent on good works. Other times, you will jump into acting and do the wrong thing.

The difference between act (direct) and rule (indirect) utilitarianism therefore comes to a question of motivation vs. justification. Can a direct utilitarian use “the greatest total good” as a motivation if they do not know if even asking the question “what will lead to the greatest total good?” will lead to it? Can it only ever be a justification? The indirect utilitarian can be motivated by following a rule and justify her actions by claiming that, generally followed, the rule leads to the greatest good, but it is unclear what recourse (to any direct motivation for a specific action) the direct utilitarian has.

Essentially, adopting act utilitarianism requires you to accept that because you have accepted act utilitarianism you will sometimes do the wrong thing. It might be that you think that you have a fairly good rule of thumb for deliberating, such that this is still the best of your options to take (and that would be my defense), but there is something deeply unsettling and somewhat paradoxical about this consequence.

Williams makes it clear that the bad outcomes here aren’t just loss of an agent’s time. This is similar in principle to how we calculate the total utility of promulgating a rule. We accept that the total effects of the promulgation must include the utility or disutility that stems from avoiding it or breaking it, in addition to the utility or disutility of following. When looking at the costs of deliberation, we should also include the disutility that will sometimes come when we act deliberately in a way that is less optimific than we would have acted had we spontaneously acted in accordance with our disposition or moral intuitions.

This is all in the case where the act-adequacy premise is true. If it isn’t, the situation is more complex. What if some important utility of actions comes from the mood they’re done in, or in them being done spontaneously? Moods may be engineered, but it is exceedingly hard to engineer spontaneity. If the act-adequacy premise is false, then it may not hold that the (utilitarian) best world is one in which right acts are maximized. In the absence of the act-adequacy premise it is possible (although not necessarily likely) that the maximally happy world is one in which few people are motivated by utilitarian concerns.

Even if the act-adequacy premise holds, we may be unable to know if our actions are at all right or wrong (again complicating the question of motivation).

Williams presents a thought experiment to demonstrate this point. Imagine a utilitarian society that noticed its younger members were liable to stray from the path of utilitarianism. This society might set up a Truman Show-esque “reservation” of non-utilitarians, with the worst consequences of their non-utilitarian morality broadcast for all to see. The youth wouldn’t stray and the utility of the society would be increased (for now, let’s simply assume that utilitarianism as a lived philosophy is optimific).

Here, the actions of the non-utilitarian holdouts would be right; on this both utilitarians (looking from a far enough remove) and the subjects themselves would agree. But this whole thing only works if the viewers think (incorrectly) that the actions they are seeing are wrong.

From the global utilitarian perspective, it might even be wrong for any of the holdouts to become utilitarian (even if utilitarianism were generally the best ethical system). If the number of viewers is large enough and the effect of one fewer irrational holdout is strong enough (this is a thought experiment, so we can fiddle around with the numbers such that this is indeed true), the conversion of a holdout to utilitarianism would be really bad.

Basically, it seems possible for there to be a large difference between the correct action as chosen by the individual utilitarian with all the knowledge she has and the correct action as chosen from the perspective of an omniscient observer. From the “total assessment” perspective, it is even possible that it would be best that there be no utilitarians.

Williams points out that many of the qualities we value and derive happiness from (stubborn grit, loyalty, bravery, honour) are not well aligned with utilitarianism. When we talked about ethnic cleansing earlier, we acknowledged that utilitarianism cannot distinguish between preferences people have and the preferences people should have; both are equally valid. With all that said, there’s a risk of resolving the tension between non-utilitarian preferences and the joy these preferences can bring people by trying to shape the world not towards maximum happiness, but towards the happiness easiest to measure and most comfortable to utilitarians.

Utilitarianism could also lead to disutility because of its game-theoretic consequences. On international projects or projects between large groups of people, sanctioning other actors must always be an option. Without sanctioning, the risk of defection is simply too high in many practical cases. But utilitarians are uniquely compelled to sanction (or else surrender).

If there is another group acting in an uncooperative or anti-utilitarian manner, the utilitarians must apply the least terrible sanction that will still be effective (as the utility of those they’re sanctioning still matters). The other group will of course know this and have every incentive to commit to making any conflict arising from the sanction so terrible as to make any sanctioning wrong from a utilitarian point of view. Utilitarians now must call the bluff (and risk horrible escalating conflict), or else abandon the endeavour.

This is in essence a prisoner’s dilemma. If the non-utilitarians carry on without being sanctioned, or if they change their behaviour in response to sanctions without escalation, everyone will be better off (than in the alternative). But if utilitarians call the bluff and find it was not a bluff, then the results could be catastrophic.

Williams seems to believe that utilitarians will never include an adequate fudge factor for the dangers of mutual defection. He doesn’t suggest pacifism as an alternative, but he does believe that violent sanctioning should always be used at a threshold far beyond where he assesses the simple utilitarian one to lie.

This position might be more of a historical one, in reaction to the efficiency-, order-, and domination-obsessed Soviet Communism (and its Western fellow travelers), which tended towards utilitarian justifications. All of the utilitarians I know are committed classical liberals (indeed, it sometimes seems to me that only utilitarians are classical liberals these days). It’s unclear if Williams’ criticism can be meaningfully applied to utilitarians who have internalized the severe detriments of escalating violence.

While it seems possible to produce a thought experiment where even such committed second order utilitarians would use the wrong amount of violence or sanction too early, this seems unlikely to come up in a practical context – especially considering that many of the groups most keen on using violence early and often these days aren’t in fact utilitarian. Instead it’s members of both the extreme left and right, who have independently – in an amusing case of horseshoe theory – adopted a morality based around defending their tribe at all costs. This sort of highly local morality is anathema to utilitarians.

Williams didn’t anticipate this shift. I can’t see why he shouldn’t have. Utilitarians are ever pragmatic and (should) understand that utilitarianism isn’t served by starting horrendous wars willy-nilly.

Then again, perhaps this is another harbinger of what Williams calls “utilitarianism ushering itself from the scene”. He believes that the practical problems of utilitarian ethics (from the perspective of an agent) will move utilitarianism more and more towards a system of total assessment. Here utilitarianism may demand certain things in the way of dispositions or virtues and certainly it will ask that the utility of the world be ever increased, but it will lose its distinctive character as a system that suggests actions be chosen in such a way as to maximize utility.

Williams calls this the transcendental viewpoint and pithily asks “if… utilitarianism has to vanish from making any distinctive mark in the world, being left only with the total assessment from the transcendental standpoint – then I leave it for discussion whether that shows that utilitarianism is unacceptable or merely that no one ought to accept it.”

This, I think, ignores the possibility that it might become easier in the future to calculate the utility of certain actions. The results of actions are inherently chaotic and difficult to judge, but then, so is the weather. Weather prediction has been made tractable by the application of vast computational power. Why not morality? Certainly, this can’t be impossible to envision. Iain M. Banks wrote a whole series of books about it!

Of course, if we wish to be utilitarian on a societal level, we must currently do so without the support of godlike AI. Which is what utilitarianism was invented for in the first place. Here it was attractive because it is minimally committed – it has no elaborate theological or philosophical commitments buttressing it, unlike contemporaneous systems (like Lockean natural law). There is something intuitive about the suggestion that a government should only be concerned for the welfare of the governed.

Sure, utilitarianism makes no demands on secondary principles, Williams writes, but it is extraordinarily demanding when it comes to empirical information. Utilitarianism requires clear, comprehensible, and non-cyclic preferences. For any glib rejoinders about mere implementation details, Williams has this to say:

[These problems are] seen in the light of a technical or practical difficulty and utilitarianism appeals to a frame of mind in which technical difficulty, even insuperable technical difficulty, is preferable to moral unclarity, no doubt because it is less alarming.

Williams suggests that the simplicity of utilitarianism isn’t a virtue, only indicative of “how little of the world’s luggage it is prepared to pick up”. By being immune to concerns of justice or fairness (except insofar as they are instrumentally useful to utilitarian ends), Williams believes that utilitarianism fails at many of the tasks that people desire from a government.

Personally, I’m not so sure a government commitment to fairness or justice is at all illuminating. There are currently at least two competing (and mutually exclusive) definitions of both fairness and justice in political discourse.

Should fairness be about giving everyone the same things? Or should it be about giving everyone the tools they need to have the same shot at meaningful (of course noting that meaningful is a societal construct) outcomes? Should justice mean taking into account mitigating factors and aiming for reconciliation? Or should it mean doing whatever is necessary to make recompense to the victim?

It is too easy to use fairness or justice as a sword without stopping to assess who it is aimed at and what the consequences of that aim are (says the committed consequentialist). Fairness and justice are meaty topics that deserve better than to be thrown around as a platitudinous counterargument to utilitarianism.

A much better critique of utilitarian government can be made by imagining how such a government would respond to non-utilitarian concerns. Would it ignore them? Or would it seek to direct its citizens to have only utilitarian concerns? The latter idea seems practically impossible. The former raises important questions.

Imagine a government that is minimally responsive to non-utilitarian concerns. It primarily concerns itself with maximizing utility, but accepts the occasional non-utilitarian decision as the cost it must pay to remain in power (presume that the opposition is not utilitarian and would be very responsive to non-utilitarian concerns in a way that would reduce the global utility). This government must necessarily look very different to the utilitarian elite, who understand what is going on, than it does to the masses, who might be quite upset that the government feels obligated to ignore many of their dearly held concerns.

Could such an arrangement exist with a free media? With free elections? Democracies are notably less corrupt than autocracies, so there are significant advantages to having free elections and free media. But how, if those exist, does the utilitarian government propose to keep its secrets hidden from the population? And if the government was successful, how could it respect its citizens, so duped?

In addition to all that, there is the problem of calculating how to satisfy people’s preferences. Williams identifies three problems here:

- How do you measure individual welfare?

- To what extent is welfare comparative?

- How do you develop the aggregate social preference given the answer to the preceding two questions?

Williams seems to suggest that a naïve utilitarian approach involves what I think is best summed up in a sick parody of Marx: from each according to how little they’ll miss it, to each according to how much they desire it. Surely there cannot be a worse incentive structure imaginable than the one naïve utilitarianism suggests?

When dealing with preferences, it is also the case that utilitarianism makes no distinction between fixing inequitable distributions that cause discontent and – as observed in America – convincing those affected by inequitable distributions not to feel discontent.

More problems arise around substitution or compensation. It may be more optimific for a roadway to be built one way than another and it may be more optimific for compensation to be offered to those who are affected, but it is unclear that the compensation will be at all worth it for those affected (to claim it would be, Williams declares, is “simply an extension of the dogma that every man has his price”). This is certainly hard for me to think about, perhaps especially because the common utilitarian response is a shrug – global utility must be maximized, after all.

Utilitarianism is about trade-offs. And some people have views which they hold to be beyond all trade-off. It is even possible for happiness to be buttressed by or rest entirely upon principles – principles that when dearly and truly held cannot be traded off against. Certainly, utilitarians can attempt to work around this – if such people are a minority, they will be happily trammelled by a utilitarian majority. But it is unclear what a utilitarian government could do in a case where the majority of its population is “afflicted” with deeply held non-utilitarian principles.

Williams sums this up as:

Perhaps humanity is not yet domesticated enough to confine itself to preferences which utilitarianism can handle without contradiction. If so, perhaps utilitarianism should lope off from an unprepared mankind to deal with problems it finds more tractable – such as that presented by Smart… of a world which consists only of a solitary deluded sadist.

Finally, there’s the problem of people being terrible judges of what they want, or simply not understanding the effects of their preferences (as the Americans who rely on the ACA but want Obamacare to be repealed may find out). It is certainly hard to walk the line between respecting preferences people would have if they were better informed or truly understood the consequences of their desires and the common (leftist?) fallacy of assuming that everyone who held all of the information you have must necessarily have the same beliefs as you.

All of this combines to make Williams view utilitarianism as dangerously irresponsible as a system of public decision making. It assumes that preferences exist, that the method of collecting them doesn’t fail to capture meaningful preferences, that these preferences would be vindicated if implemented, and that there’s a way to trade-off among all preferences.

To the potential utilitarian rejoinder that half a loaf is better than none, he points out that a partial version of utilitarianism is very vulnerable to the streetlight effect. It might be used where it can and therefore act to legitimize – as “real” – concerns in the areas where it can be used and delegitimize those where it is unsuitable. This can easily lead to the McNamara fallacy; deliberate ignorance of everything that cannot be quantified:

The first step is to measure whatever can be easily measured. This is OK as far as it goes. The second step is to disregard that which can't be easily measured or to give it an arbitrary quantitative value. This is artificial and misleading. The third step is to presume that what can't be measured easily really isn't important. This is blindness. The fourth step is to say that what can't be easily measured really doesn't exist. This is suicide. — Daniel Yankelovich "Corporate Priorities: A continuing study of the new demands on business." (1972)

This isn’t even to mention something that every serious student of economics knows: that when dealing with complicated, idealized systems, it is not necessarily the non-ideal system that is closest to the ideal (out of all possible non-ideal systems) that has the most benefits of the ideal. Economists call this the “theory of the second best”. Perhaps ethicists might call it “common sense” when applied to their domain?

Williams ultimately doubts that systematic thought is at all capable of dealing with the myriad complexities of political (and moral) life. He describes utilitarianism as “having too few thoughts and feelings to match the world as it really is”.

I disagree. Utilitarianism is hard, certainly. We do not agree on what happiness is, or how to determine which actions will most likely bring it, fine. Much of this comes from our messy inbuilt intuitions, intuitions that are not suited for the world as it now is. If utilitarianism is simple minded, surely every other moral system (or lack of system) must be as well.

In many ways, Williams did shake my faith in utilitarianism – making this an effective and worthwhile essay. He taught me to be fearful of eliminating from consideration all joys but those that the utilitarian can track. He drove me to question how one can advocate for any ethical system at all, denied the twin crutches of rationalism and theology. And he further shook my faith in individuals being able to do most aspects of the utilitarian moral calculus. I think I’ll have more to say on that last point in the future.

But by their actions you shall know the righteous. Utilitarians are currently at the forefront of global poverty reduction, disease eradication, animal suffering alleviation, and existential risk mitigation. What complexities of the world has every other ethical system missed to leave these critical tasks largely to utilitarians?

Williams gave me no answer to this. For all his beliefs that utilitarianism will have dire consequences when implemented, he has no proof to hand. And ultimately, consequences are what you need to convince a consequentialist.